Rohit K. Vartak

Duke University | An aspiring LLM researcher

Durham, North Carolina

United States, 27705

I am a second-year M.S. student in Computer Science at Duke University.

My research interests lie in the robustness and reasoning of LLMs. I am particularly interested in understanding how models reason, improving that reasoning process, and ensuring that these models remain efficient and scalable in real-world settings.

I am currently advised by Prof. Bhuwan Dhingra at Duke, where I am exploring the mathematical reasoning capabilities of multimodal LLMs.

Previously, I interned at CERT Labs, working under Prof. Praneeth Vepakomma at Mohamed bin Zayed University of Artificial Intelligence (MBZUAI), where my focus was on improving the efficiency and scalability of large language models.

I was also advised by Prof. Monica Agrawal at Duke University, where I studied medical chatbots and worked to improve their accuracy and real-world usability.

Feel free to explore my work and reach out! 🚀

news

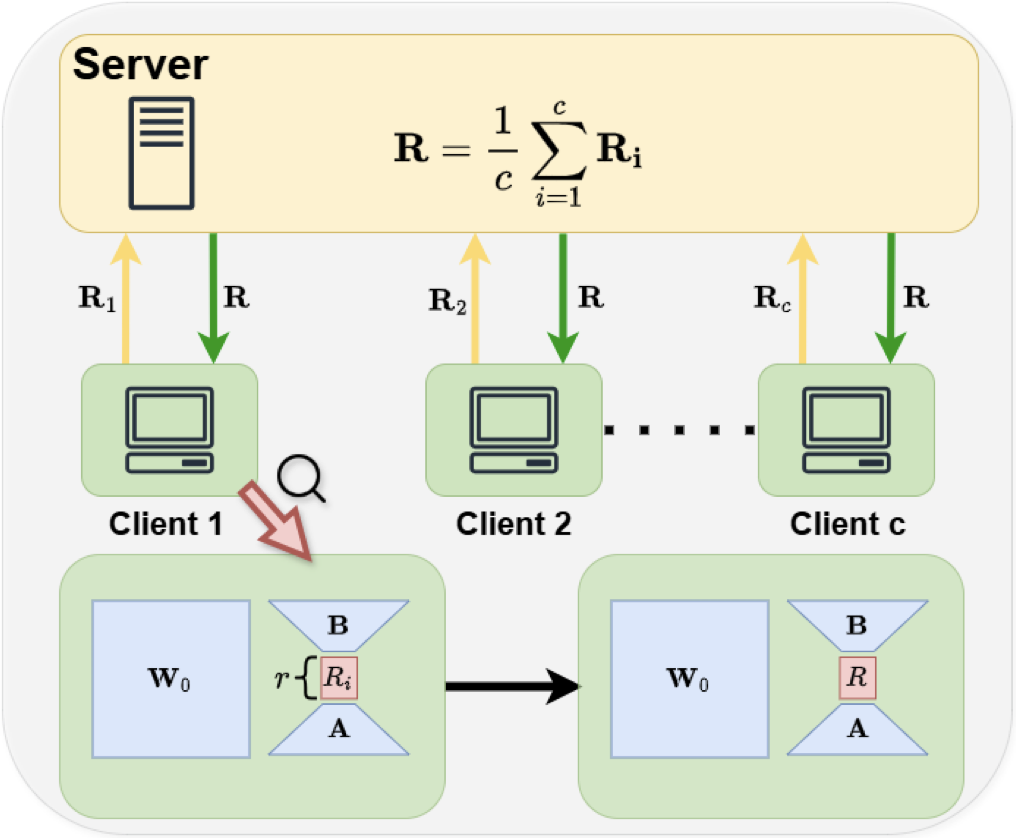

| Mar 04, 2026 | Excited to share that our work, “Fed-SB: A Silver Bullet for Extreme Communication Efficiency and Performance in (Private) Federated LoRA Fine-Tuning”! has been accepted at TMLR 2026🚀 |

|---|---|

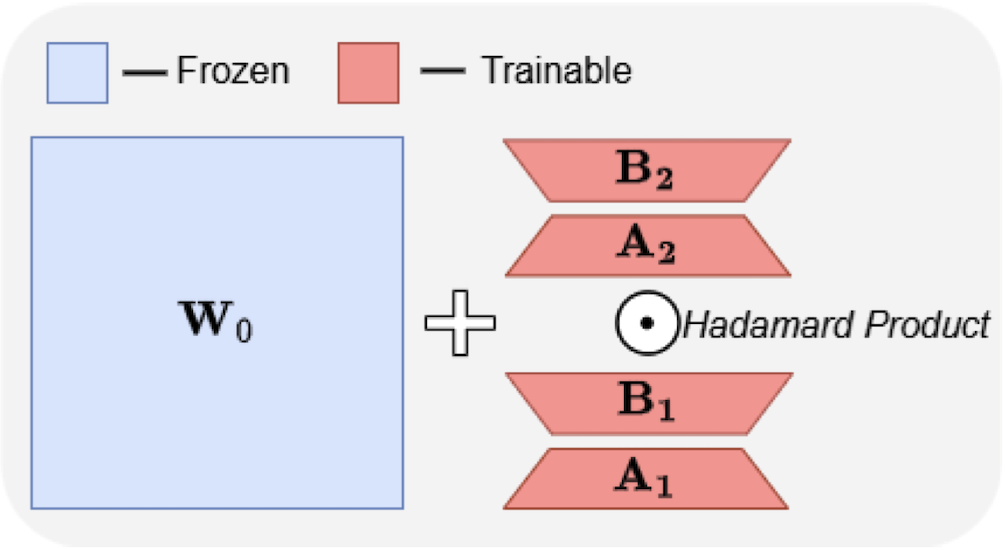

| Jan 20, 2026 | Excited to share that our work, “ABBA-Adapters: Efficient and Expressive Fine-Tuning of Foundation Models”! has been accepted at ICLR 2026🚀 |

| Jun 26, 2025 | Excited to share our latest work, ““What’s Up, Doc?”: Analyzing How Users Seek Health Information in Large-Scale Conversational AI Datasets”! Now available on arXiv🚀 |

| May 21, 2025 | 🚀 Just dropped: “ABBA-Adapters: Efficient and Expressive Fine-Tuning of Foundation Models” is now on arXiv |

| Mar 13, 2025 | Excited to share our latest work, “Fed-SB: A Silver Bullet for Extreme Communication Efficiency and Performance in (Private) Federated LoRA Fine-Tuning”! Now available on arXiv🚀 |